How to Evaluate Which Model Is Best Based on AIC

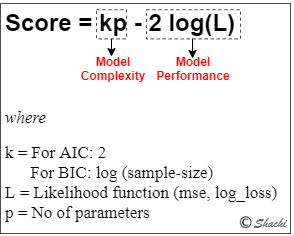

Here we have tried to understand what is actually happening inside. This method consists of fitting the ARIMA model for different values of p and q and choosing the best values based on metrics such as AIC and BIC.
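A minimal sketch of this kind of order search, under simplifying assumptions: instead of a full ARIMA(p, d, q) fit it uses a pure AR(p) model fitted by conditional least squares on a simulated series, so that it runs with only the Python standard library. The helper names `solve` and `ar_aic` and the simulated data are illustrative and not taken from the original.

```python
import math
import random


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def ar_aic(y, p, max_p):
    """AIC of an AR(p) model with intercept, fitted by conditional least squares.

    The first max_p points serve only as lags, so every order is scored
    on exactly the same observations.
    """
    rows = [[1.0] + [y[t - j] for j in range(1, p + 1)] for t in range(max_p, len(y))]
    target = y[max_p:]
    k = p + 1  # number of regression coefficients
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * v for r, v in zip(rows, target)) for i in range(k)]
    beta = solve(xtx, xty)
    rss = sum((v - sum(b * x for b, x in zip(beta, r))) ** 2 for r, v in zip(rows, target))
    n = len(target)
    # Gaussian log-likelihood evaluated at the estimated noise variance rss / n
    log_lik = -0.5 * n * (math.log(2 * math.pi * rss / n) + 1)
    n_params = p + 2  # intercept, p lag coefficients, noise variance
    return 2 * n_params - 2 * log_lik


# Simulated AR(1) series (assumed data, for illustration only)
rng = random.Random(0)
y = [0.0]
for _ in range(399):
    y.append(0.8 * y[-1] + rng.gauss(0, 1))

max_p = 3
scores = {p: ar_aic(y, p, max_p) for p in range(max_p + 1)}
best_p = min(scores, key=scores.get)
for p, score in scores.items():
    print(f"p={p}: AIC={score:.2f}")
print("order with lowest AIC:", best_p)
```

With a real ARIMA library the loop would look the same: fit each candidate order, record its AIC, and keep the minimum.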

The Akaike information criterion (AIC) as a function of model order.

When the true model is among the candidate models, the probability that BIC chooses the true model $\to 1$ as $n \to \infty$.

Only present the model with the lowest AIC value. So, as per the formula for the AIC score. They can be used to select the best model out of a set of two or more models, as opposed.

One might consider $\Delta_i = \mathrm{AIC}_i - \mathrm{AIC}_{\min}$ a rescaling transformation that forces the best model to have $\Delta_i = 0$. However, developing accurate analytical models of. You will run into the same problems with multiple model comparison as you would with p-values, in that you might.

The best-fit model carrying 96% of. The model with the minimum AIC value, denoted by $\mathrm{AIC}_{\min}$, is then the best model. The lower the AIC, the better the model.

You shouldn't compare too many models with the AIC.

    library(forecast)
    # Fit an ARIMA model for every (p, d, q) combination and print its AIC
    for (d in 0:1) {
      for (p in 0:9) {
        for (q in 0:9) {
          fit <- Arima(midts, order = c(p, d, q))
          print(paste0("AIC is ", AIC(fit), " for d = ", d, ", p = ", p, " and q = ", q))
        }
      }
    }

Choose generalised linear models, go to Type of Model and check binary logistic; then, under Response, give the response as the dependent variable, so that one can choose predictors for prediction in this step.

AIC (Akaike information criterion) is a metric which tells us how good a model is. $2 \times 8 + 2 \times 986.86 = 1989.72$, rounded to 1990. The desired result is to find the lowest possible AIC.
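The arithmetic can be checked in a few lines. This sketch assumes the usual definition, AIC = 2k − 2·(maximized log-likelihood), and reads the figures above as a model with 8 parameters and a maximized log-likelihood of −986.86; the function name `aic_score` is illustrative.

```python
def aic_score(n_params, log_likelihood):
    """AIC = 2 * (number of parameters) - 2 * (maximized log-likelihood)."""
    return 2 * n_params - 2 * log_likelihood


# Assumed inputs: 8 parameters, maximized log-likelihood of -986.86
score = aic_score(n_params=8, log_likelihood=-986.86)
print(f"AIC = {score:.2f}, rounded: {round(score)}")  # AIC = 1989.72, rounded: 1990
```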

`model = ARIMA(df.value, order=(i, j, k)).fit()` `print(model.aic)` The best AIC can easily be calculated through libraries. The AIC and BIC can also compare non-nested models. AIC is more likely to choose a more complex model for any given n.

$\mathrm{AIC} = -\frac{2}{N} \cdot LL + \frac{2k}{N}$. The AIC also penalizes models which have lots of parameters. Take into account the number.

Akaike (1973) and Bayesian information criterion (BIC). `for k in range(0, 2):` So then the approach above, based on AIC, BIC and FIC, makes sense.

When model fits are ranked according to their AIC values, the model with the lowest AIC value is considered the best. In statistics, AIC is used to compare different possible models and determine which one is the best fit for the data. As a result, training on all the data and using AIC can result in improved model selection over traditional train/validation/test model selection methods.

AICc is a version of AIC corrected for small sample sizes. The delta AIC for the mth candidate model, denoted by $\Delta_m$, is simply the difference between $\mathrm{AIC}_m$ and the minimum AIC. Model selection criteria commonly used in the selection of ARIMA models, namely.
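As a rough illustration of the small-sample correction, the sketch below uses the commonly quoted form AICc = AIC + 2k(k + 1) / (n − k − 1), where k is the number of parameters and n the number of observations. The function name `aicc` and the input values are hypothetical.

```python
def aicc(aic, n_params, n_obs):
    """Small-sample corrected AIC: AIC + 2k(k + 1) / (n - k - 1)."""
    if n_obs - n_params - 1 <= 0:
        raise ValueError("AICc requires n_obs > n_params + 1")
    return aic + (2 * n_params * (n_params + 1)) / (n_obs - n_params - 1)


# Hypothetical AIC of 100.0 for a model with 8 parameters
for n_obs in (20, 50, 500):
    correction = aicc(100.0, 8, n_obs) - 100.0
    print(f"n={n_obs}: correction={correction:.3f}")
```

The correction shrinks as the sample grows, so AICc and AIC agree for large n.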

$\text{AIC score} = 2 \times (\text{number of parameters}) - 2 \times (\text{maximized log-likelihood})$. Based on printing the parameters along with AIC. Present all models in which the difference in AIC relative to AICmin is < 2 (parameter estimates or graphically).

R-squared (R²): y = dependent variable values, y_hat = predicted values from the model, y_bar = the mean of y. The AIC value contains scaling constants coming from the log-likelihood $\mathcal{L}$, and so the $\Delta_i$ are free of such constants. The Akaike information criterion (AIC) is a mathematical method for evaluating how well a model fits the data it was generated from.
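For reference, R² can be computed from these three quantities as 1 − SS_res / SS_tot. The sketch below is a plain-Python illustration with made-up numbers; the function name `r_squared` is an assumption.

```python
def r_squared(y, y_hat):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_bar = sum(y) / len(y)
    ss_res = sum((obs - pred) ** 2 for obs, pred in zip(y, y_hat))  # unexplained variation
    ss_tot = sum((obs - y_bar) ** 2 for obs in y)                   # total variation around the mean
    return 1 - ss_res / ss_tot


# Made-up observed and predicted values
y = [3.0, 5.0, 7.0, 9.0]
y_hat = [2.5, 5.5, 6.5, 9.5]
r2 = r_squared(y, y_hat)
print(round(r2, 4))  # 0.95
```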

Models in which the difference in AIC relative to AICmin is < 2 can. The score as defined above is minimized, e.g. An AIC can be calculated for each candidate model, denoted by $\mathrm{AIC}_m$, $m = 1, \ldots$
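A small sketch of this bookkeeping, assuming the cutoff is a difference of less than 2 from the minimum AIC. The model names and AIC values are invented for illustration, and `delta_aic` is a hypothetical helper.

```python
def delta_aic(aic_by_model):
    """Return each model's AIC difference from the lowest AIC in the set."""
    best = min(aic_by_model.values())
    return {name: value - best for name, value in aic_by_model.items()}


# Invented AIC values for four candidate models
candidates = {
    "ARIMA(1,0,0)": 1992.4,
    "ARIMA(1,0,1)": 1989.7,
    "ARIMA(2,0,1)": 1990.9,
    "ARIMA(3,0,2)": 1997.3,
}
deltas = delta_aic(candidates)
close = sorted(name for name, d in deltas.items() if d < 2)
print("models within 2 of the minimum:", close)

# Adding the same constant to every AIC leaves the differences unchanged
shifted = delta_aic({name: value + 1000.0 for name, value in candidates.items()})
```

Because only differences matter, any constant shared by all log-likelihoods drops out of the comparison.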

In other words, it represents the strength of the fit; however, it does not say anything about the model itself. BIC is less likely to choose a model that is too complex if n is sufficient, but it is more likely, for any given n, to choose a model that is too small. The best ARIMA models can be obtained based on the model selection criteria.

Example methods: We used AIC model selection to distinguish among a set of possible models describing the relationship between age, sex, sweetened beverage consumption, and body mass index. For large sample sizes, model selection conducted with the AIC will choose the same model as leave-one-out cross-validation, where we leave out one data point, fit the model, and then evaluate its fit to that point. Here N is the number of examples in the training dataset, LL is the log-likelihood of the model on the training dataset, and k is the number of parameters in the model.
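Assuming these symbols belong to the per-example form AIC = −2/N · LL + 2k/N, the sketch below shows that this is the usual AIC divided by N, so the ranking of models fitted to the same dataset does not change. The function name and the numbers are illustrative.

```python
def aic_per_example(log_lik, n_params, n_examples):
    """Per-example AIC: -2/N * LL + 2 * k/N."""
    return -2.0 / n_examples * log_lik + 2.0 * n_params / n_examples


# Illustrative values: N = 200 training examples, two candidate models
n_examples = 200
model_a = aic_per_example(log_lik=-986.86, n_params=8, n_examples=n_examples)
model_b = aic_per_example(log_lik=-990.10, n_params=5, n_examples=n_examples)
print(f"model A: {model_a:.4f}, model B: {model_b:.4f}")

# Multiplying by N recovers the classic 2k - 2*LL score
classic_a = model_a * n_examples
```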

The lower the value, the better the model. You can do it in the following two ways. Models can be compared with a χ²-difference test or with information criteria like Akaike's information criterion (AIC).

The R² value, also known as the coefficient of determination, tells us how well the predicted data, denoted by y_hat, explains the actual data, denoted by y. Predictions based on analytical performance models can be used in efficient scheduling policies in order to select adequate resources for an optimal execution in terms of throughput and response time. BIC, or Bayesian information criterion, is a variant of AIC with a stronger penalty for including additional variables in the model.
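To make the stronger penalty concrete, the sketch below uses the standard forms AIC = 2k − 2·LL and BIC = k·ln(n) − 2·LL on two hypothetical models. The log-likelihoods, parameter counts, and sample size are invented so that the two criteria disagree.

```python
import math


def aic(log_lik, n_params):
    """AIC = 2k - 2 * LL."""
    return 2 * n_params - 2 * log_lik


def bic(log_lik, n_params, n_obs):
    """BIC = k * ln(n) - 2 * LL."""
    return n_params * math.log(n_obs) - 2 * log_lik


n_obs = 200  # invented sample size
simple = {"log_lik": -500.0, "n_params": 3}
complex_ = {"log_lik": -496.0, "n_params": 6}

aic_simple, aic_complex = aic(**simple), aic(**complex_)
bic_simple, bic_complex = bic(n_obs=n_obs, **simple), bic(n_obs=n_obs, **complex_)
print(f"AIC: simple={aic_simple:.1f}, complex={aic_complex:.1f}")
print(f"BIC: simple={bic_simple:.1f}, complex={bic_complex:.1f}")
```

In this example AIC prefers the larger model while BIC prefers the smaller one, since each extra parameter costs ln(200) ≈ 5.3 under BIC but only 2 under AIC.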

Even so, recall that once model selection has been performed and you have ended up with a model of choice, you cannot trust the significance tests anymore because they are. You can use the following methods. `for j in range(0, 2):`

Step 3 - Calculating AIC. AIC works by evaluating the model's fit on the training data and adding a penalty term for the complexity of the model (similar fundamentals to regularization). `for i in range(0, 2):`

A weird AIC value is generated when it tests. Here you will have to visually inspect which model is best, which is time-consuming and not a good way. Maximized log-likelihood and the AIC score (image by author). We can see that the model contains 8 parameters (7 time-lagged variables + intercept).

More technically, AIC and BIC are based on different motivations: AIC is an index based on information theory, which has a focus on predictive accuracy, while BIC is an index derived as an approximation of the Bayes factor, which is used to find the true model if it exists. Akaike's information criterion (AIC) introduced by Akaike [3], bias-corrected Akaike's information criterion (AICC) by Hurvich and Tsai [4], Bayesian. The model with the lowest AIC is selected.

Practically, AIC tends to select a model that maybe. Report that you used AIC model selection, briefly explain the best-fit model you found, and state the AIC weight of the model.
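The AIC weight mentioned here is usually computed as an Akaike weight, w_i = exp(−Δ_i / 2) / Σ_j exp(−Δ_j / 2), where Δ_i is the model's AIC minus the minimum AIC. The sketch below assumes that definition; the function name and the three AIC values are hypothetical.

```python
import math


def akaike_weights(aics):
    """Turn a list of AIC values into Akaike weights that sum to 1."""
    best = min(aics)
    relative = [math.exp(-0.5 * (a - best)) for a in aics]  # relative likelihood of each model
    total = sum(relative)
    return [r / total for r in relative]


# Hypothetical AIC values for three candidate models
weights = akaike_weights([100.0, 102.0, 110.0])
for i, w in enumerate(weights, start=1):
    print(f"model {i}: weight={w:.3f}")
```

The weight of the best model is what one would report alongside its AIC.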

